LSTM Drone Scheduling Model

Problem Statement

Marine species monitoring through drone-based coastal surveys faces a critical challenge: adverse weather conditions frequently disrupt planned operations, leading to wasted resources and incomplete data collection. Traditional scheduling approaches rely on static weather forecasts that often fail to account for site-specific microclimates and temporal patterns.

The need for a more intelligent, data-driven approach to scheduling became apparent while working with the Biological Sciences team: weather-related cancellations affected a large share of scheduled flights, and the cancellation rate varied significantly between monitoring sites.

Methodology

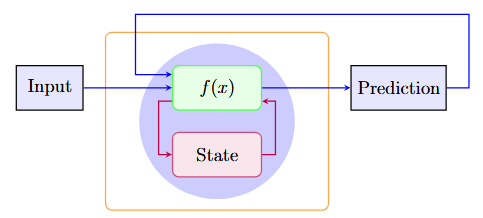

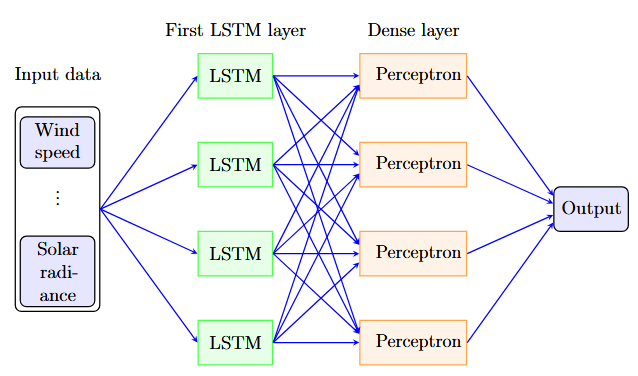

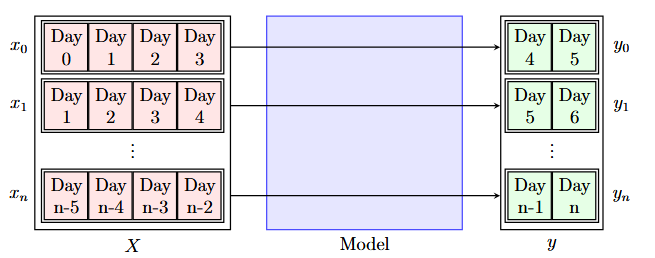

We developed a dual LSTM architecture that processes historical time-series data from two coastal monitoring sites simultaneously. Each LSTM network learns site-specific weather patterns including wind speed, precipitation and solar irradiation.

The model architecture consists of:

- Parallel LSTM encoders for each site (128 hidden units)

- Attention mechanism to weight recent observations

- Fusion layer combining site forecasts

- Policy network outputting site recommendation and confidence score
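The components listed above can be sketched as a forward pass in NumPy. This is a minimal illustration, not the project's implementation: the weights are random and untrained, and the three input features per hour, the dot-product form of the attention, the `tanh` fusion layer, and the use of the softmax probability as the confidence score are all assumptions. The class and function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(x):
    e = np.exp(x - np.max(x))  # subtract the max for numerical stability
    return e / e.sum()

class LSTMEncoder:
    """Single-layer LSTM run over one site's feature sequence."""

    def __init__(self, n_features, hidden, rng):
        scale = 1.0 / np.sqrt(hidden)
        # One stacked weight matrix for the input, forget, cell and output gates.
        self.W = rng.uniform(-scale, scale, (4 * hidden, n_features + hidden))
        self.b = np.zeros(4 * hidden)
        self.hidden = hidden

    def forward(self, seq):
        """seq has shape (T, n_features); returns hidden states of shape (T, hidden)."""
        h = np.zeros(self.hidden)
        c = np.zeros(self.hidden)
        states = []
        for x_t in seq:
            z = self.W @ np.concatenate([x_t, h]) + self.b
            i, f, g, o = np.split(z, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
            states.append(h)
        return np.stack(states)

def attend(states, query):
    """Dot-product attention pooling over time steps (assumed form)."""
    weights = softmax(states @ query)  # one weight per time step
    return weights @ states, weights

class DualSiteScheduler:
    """Parallel encoders -> attention -> fusion -> policy head."""

    def __init__(self, n_features=3, hidden=128, n_sites=2, seed=0):
        rng = np.random.default_rng(seed)
        self.encoders = [LSTMEncoder(n_features, hidden, rng) for _ in range(n_sites)]
        self.queries = [rng.normal(0, 0.1, hidden) for _ in range(n_sites)]
        self.W_fuse = rng.normal(0, 0.1, (hidden, n_sites * hidden))
        self.W_policy = rng.normal(0, 0.1, (n_sites, hidden))

    def recommend(self, site_sequences):
        contexts = []
        for enc, query, seq in zip(self.encoders, self.queries, site_sequences):
            context, _ = attend(enc.forward(seq), query)
            contexts.append(context)
        fused = np.tanh(self.W_fuse @ np.concatenate(contexts))
        probs = softmax(self.W_policy @ fused)
        site = int(np.argmax(probs))
        return site, float(probs[site])  # site index and its probability

# Synthetic input: 48 hours of (wind speed, precipitation, irradiation) per site.
rng = np.random.default_rng(1)
site_a = rng.normal(size=(48, 3))
site_b = rng.normal(size=(48, 3))
model = DualSiteScheduler()
site, confidence = model.recommend([site_a, site_b])
```

With two sites, the softmax probability of the chosen site always lies between 0.5 and 1.0, which makes it a convenient stand-in for a confidence score in this sketch. A trained model would need a loss function, an optimizer, and the real observation windows, none of which are shown here.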

Training data spanned over 40 years of hourly weather observations, with careful handling of missing data.
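The write-up does not say how missing observations were handled. One common approach for hourly series, sketched here purely as an assumption, is to interpolate short gaps linearly and keep a mask of which values were actually observed, leaving long gaps unfilled so that affected training windows can be dropped. The function name `fill_short_gaps` and the three-hour limit are hypothetical.

```python
from typing import List, Optional, Tuple

def fill_short_gaps(
    values: List[Optional[float]], max_gap: int = 3
) -> Tuple[List[Optional[float]], List[bool]]:
    """Linearly interpolate runs of missing values up to max_gap hours long.

    Returns the filled series and a mask marking which entries were observed.
    Longer gaps, and gaps touching either end of the series, stay as None.
    """
    filled = list(values)
    observed = [v is not None for v in values]
    i, n = 0, len(values)
    while i < n:
        if values[i] is not None:
            i += 1
            continue
        start = i
        while i < n and values[i] is None:
            i += 1
        end = i  # first observed index after the gap, or n if the series ends
        gap = end - start
        if start > 0 and end < n and gap <= max_gap:
            left, right = values[start - 1], values[end]
            for k in range(start, end):
                frac = (k - start + 1) / (gap + 1)
                filled[k] = left + frac * (right - left)
    return filled, observed

# Illustrative wind speeds (m/s) with a 2-hour gap, a 4-hour gap, and a trailing gap.
wind = [4.0, None, None, 7.0, None, None, None, None, 5.0, None]
filled, observed = fill_short_gaps(wind, max_gap=3)
```

In this example only the two-hour gap is filled. The four-hour gap exceeds `max_gap` and the trailing gap has no right-hand neighbour, so both remain `None`. The mask can also be fed to the model as an extra input feature so that it can distinguish interpolated values from measured ones.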

Results

The model was evaluated on forecast accuracy, site selection accuracy, and recommendation confidence:

- Weather forecast accuracy (24-hour horizon): 64% for the Ōmaha Lagoon site, 57% for the Pt. Chevalier site

- Optimal site selection accuracy: 73% when tested against ground truth data

- Average confidence score for recommendations: 0.78
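For reference, the last two metrics above could be computed along the following lines. This is a sketch under the assumption that each scheduling decision has one recommended site, one ground-truth best site, and one confidence score. The labels and values in the example are made up for illustration and are not the project's data.

```python
from statistics import mean
from typing import Sequence

def selection_accuracy(recommended: Sequence[str], ground_truth: Sequence[str]) -> float:
    """Fraction of decisions where the recommended site matches the best site."""
    if len(recommended) != len(ground_truth):
        raise ValueError("recommended and ground_truth must have the same length")
    if not recommended:
        raise ValueError("at least one decision is required")
    hits = sum(r == g for r, g in zip(recommended, ground_truth))
    return hits / len(recommended)

# Made-up example: four scheduling decisions.
recommended = ["A", "B", "A", "A"]
ground_truth = ["A", "B", "B", "A"]
confidences = [0.9, 0.7, 0.6, 0.8]

accuracy = selection_accuracy(recommended, ground_truth)
average_confidence = mean(confidences)
```

Comparing the two numbers gives a rough calibration check: an average confidence well above the selection accuracy suggests the policy network is overconfident.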

The weighted policy network learned to favor Site A during morning hours, when offshore winds are calmer, and Site B during afternoon periods, when coastal conditions stabilize. Traditional forecasting approaches did not reveal this site-specific, time-of-day pattern.

Impact & Future Work

Implementation of the LSTM scheduling model in operational planning led to measurable improvements in survey efficiency. The research team reported increased data collection rates and better resource utilization, with drone operators able to plan multi-day campaigns with greater confidence.

Future directions include:

- Expanding to additional monitoring sites with transfer learning

- Incorporating real-time sensor data from deployed weather stations

- Developing a mobile application interface for field operators

- Exploring ensemble methods combining LSTM predictions with numerical weather models

The framework demonstrates how machine learning can optimize resource-constrained environmental monitoring operations, with potential applications extending to other weather-dependent field research activities.